
Decoding AI Bias: How Fair Is Artificial Intelligence, Really? | Deepthink AI Newsletter

AI is everywhere, but fairness isn’t. From hiring tools to facial recognition, biased data is silently shaping biased outcomes. In this edition, we uncover how AI bias happens, where it hides, and what we can do to build more ethical and fair systems.

Artificial Intelligence is everywhere — from recommending your next movie to screening resumes for your dream job. But here’s the uncomfortable truth: AI isn’t neutral.

It reflects the data it was trained on — which often means it reflects human bias.

⚫ So the real question isn’t “Can AI make decisions?”

⚫ It’s “Can AI make fair decisions?”

🔍 What is AI Bias?

AI bias occurs when an AI system produces unfair, prejudiced, or skewed results due to the way it was trained or the data it was fed.

Think of it like this:

Garbage in, garbage out.

If your training data is biased, your AI’s output will be too.

Where Does This Bias Come From?

| Source of Bias | Example |
|---|---|
| Biased training data | Facial recognition systems that misidentify people with darker skin tones |
| Labeling bias | Humans tagging resumes or photos differently based on personal stereotypes |
| Sampling bias | Underrepresentation of certain groups in datasets |
| Algorithm design choices | Prioritizing speed over fairness in hiring models |
| Feedback loop bias | Recommender systems reinforcing existing trends or prejudices |
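Sampling bias is the easiest of these to check before training. Here is a minimal sketch, assuming you have a group label for each training example and a rough idea of each group's share among the people the model will serve. The group names, the shares, and the 5-point tolerance are all made up for illustration.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return (dataset share - reference share) for each reference group."""
    counts = Counter(groups)
    total = len(groups)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical data: the group label attached to each training example.
training_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5

# Hypothetical shares of each group among the people the model will serve.
population_shares = {"A": 0.50, "B": 0.30, "C": 0.20}

# Assumed tolerance: flag any group more than 5 points below its share.
TOLERANCE = 0.05

gaps = representation_gaps(training_groups, population_shares)
underrepresented = sorted(g for g, gap in gaps.items() if gap < -TOLERANCE)

for group, gap in sorted(gaps.items()):
    print(f"{group}: {gap:+.2f}")
print("Under-represented:", underrepresented)
```

A negative gap means the group appears less often in the data than in the population. What counts as "too far below" is a judgment call, not a standard.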

Real-World Examples of Biased AI

Amazon’s Hiring Algorithm

It favored male candidates because it was trained on past hiring data, which came mostly from male applicants.

Facial Recognition Failures

Several systems showed higher error rates for women and people of color, and misidentifications have led to wrongful arrests in some U.S. cities.

Healthcare Algorithms

A widely used risk-prediction algorithm scored white patients as needing more care than Black patients with the same health profile. It used past healthcare spending as a proxy for need, and historical disparities in access meant less had been spent on Black patients.
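"Higher error rates for some groups" is something you can measure directly. The sketch below computes a false positive rate per group on toy data. The labels, predictions, and group names are invented, and false positive rate is only one of several error metrics you might compare.

```python
def false_positive_rate(y_true, y_pred):
    """Share of true negatives that the model wrongly flagged as positive."""
    flagged = [pred for truth, pred in zip(y_true, y_pred) if truth == 0]
    return sum(flagged) / len(flagged) if flagged else 0.0


def rate_by_group(y_true, y_pred, groups):
    """Compute the false positive rate separately for each group."""
    result = {}
    for group in sorted(set(groups)):
        idx = [i for i, g in enumerate(groups) if g == group]
        result[group] = false_positive_rate(
            [y_true[i] for i in idx], [y_pred[i] for i in idx]
        )
    return result


# Toy data (hypothetical): 1 = "match", 0 = "no match".
y_true = [0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 1, 0]
groups = ["A"] * 6 + ["B"] * 6

overall = false_positive_rate(y_true, y_pred)
rates = rate_by_group(y_true, y_pred, groups)

print("Overall FPR:", overall)
print("FPR by group:", rates)
```

In this toy data the overall rate sits between the two group rates, which is why a single aggregate number can hide a gap that only shows up when you split by group.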

⚖️ Why AI Bias Matters

Trust & Ethics: Users won’t adopt tech they can’t trust.

Legal Risks: Biased AI can violate discrimination laws.

Business Impact: Bad decisions = lost customers, bad PR, and regulatory trouble.

Social Responsibility: Tech should reduce inequality — not amplify it.

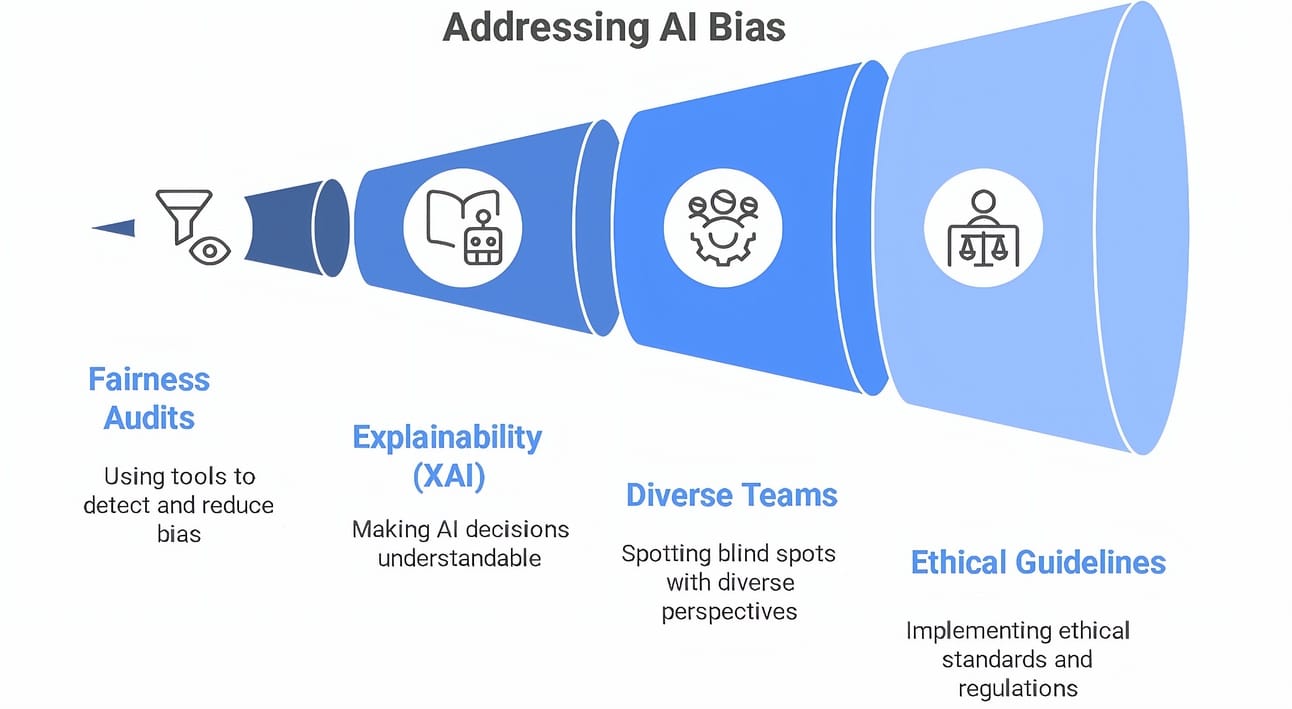

💡 What’s Being Done About It?

Governments, researchers, and companies are waking up. Some solutions include:

Fairness Audits: Tools like IBM’s AI Fairness 360 help detect and reduce bias.

Explainability (XAI): Making AI decisions understandable.

Diverse Teams: Diverse engineers spot blind spots others may miss.

Rules and Guidelines: Binding regulation such as the EU AI Act, plus voluntary frameworks such as the OECD AI Principles.
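To make "fairness audit" concrete, here is a rough sketch of two numbers such audits commonly report: the difference and the ratio between group selection rates. It is plain Python on invented hiring decisions, not the API of AI Fairness 360 or any other toolkit. The 0.8 cutoff follows the "four-fifths" rule of thumb from U.S. employment guidance and is used here only as an illustrative threshold.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (1 = selected) within each group."""
    totals, selected = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + decision
    return {group: selected[group] / totals[group] for group in totals}


def audit(decisions, groups):
    """Summarize how far apart the group selection rates are."""
    rates = selection_rates(decisions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": lowest / highest if highest else 1.0,
    }


# Hypothetical screening decisions: 1 = advanced to interview.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["men"] * 5 + ["women"] * 5

report = audit(decisions, groups)
# Illustrative threshold only: the "four-fifths" rule of thumb.
passes_four_fifths = report["impact_ratio"] >= 0.8

print(report)
print("Passes four-fifths check:", passes_four_fifths)
```

Passing a check like this does not prove a model is fair. It only rules out one specific kind of gap, which is why audits usually look at several metrics together.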

🔧 What Can You Do (as a Founder, Creator, or Learner)?

Ask Questions: Where did this data come from? Is it diverse?

Build Transparent AI: Show users how the model works (even if simplified).

Monitor Outputs: Constantly check how your model behaves in the real world.

Stay Updated: AI ethics is evolving fast — so keep learning.
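"Monitor outputs" can start very small. The sketch below keeps a sliding window of recent decisions and raises a flag when the gap between group approval rates grows past a limit. The class name, window size, and limit are assumptions chosen for illustration, and a real deployment would also need logging and a plan for what happens after an alert.

```python
from collections import deque


class FairnessMonitor:
    """Tracks the approval-rate gap between groups over recent decisions."""

    def __init__(self, window=20, max_gap=0.2):
        self.recent = deque(maxlen=window)  # oldest entries drop off automatically
        self.max_gap = max_gap

    def record(self, group, decision):
        self.recent.append((group, decision))

    def gap(self):
        totals, approved = {}, {}
        for group, decision in self.recent:
            totals[group] = totals.get(group, 0) + 1
            approved[group] = approved.get(group, 0) + decision
        rates = [approved[g] / totals[g] for g in totals]
        return max(rates) - min(rates) if len(rates) > 1 else 0.0

    def alert(self):
        return self.gap() > self.max_gap


monitor = FairnessMonitor(window=20, max_gap=0.2)

# Phase 1 (hypothetical): both groups approved half the time.
for _ in range(5):
    for group, decision in [("A", 1), ("B", 1), ("A", 0), ("B", 0)]:
        monitor.record(group, decision)
alert_before = monitor.alert()

# Phase 2 (hypothetical drift): group A always approved, group B never.
for _ in range(10):
    monitor.record("A", 1)
    monitor.record("B", 0)
alert_after = monitor.alert()

print("Alert before drift:", alert_before)
print("Alert after drift:", alert_after)
```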

🛠️ Tools You Can Explore

IBM AI Fairness 360

Google’s What-If Tool

Fairlearn (Microsoft)

SHAP & LIME (Model Explainability)
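If you want a feel for what explainability tools do before installing any of them, permutation importance is a simple stand-in. Shuffle one input feature, then see how much the model's accuracy drops. This is not how SHAP or LIME work internally; it is a simpler technique that answers a similar question. The toy model and data below are invented.

```python
import random


def accuracy(model, rows, labels):
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature, repeats=20, seed=0):
    """Average accuracy drop when one feature's values are shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        shuffled = [
            row[:feature] + [value] + row[feature + 1:]
            for row, value in zip(rows, column)
        ]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats


# Hypothetical model: approves on years of experience (feature 0) and
# ignores feature 1 entirely.
def toy_model(row):
    return 1 if row[0] >= 5 else 0


rows = [[1, 0], [2, 1], [3, 0], [4, 1], [6, 0], [7, 1], [8, 0], [9, 1]]
labels = [toy_model(row) for row in rows]

importance_experience = permutation_importance(toy_model, rows, labels, feature=0)
importance_ignored = permutation_importance(toy_model, rows, labels, feature=1)

print("Feature 0 importance:", importance_experience)
print("Feature 1 importance:", importance_ignored)
```

A feature the model never reads scores exactly zero. If a sensitive attribute, or a close proxy for one, scores high, that is a signal to look more closely.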

💬 Final Thought

AI won’t solve our human flaws.

But if we build with awareness + intention, we can shape a future where technology uplifts — not divides.

Bias isn’t a bug in AI.

It’s a reflection of us. Let’s make sure it reflects our best, not our worst.

✍️ Drop your thoughts below — ever faced bias in AI tools? Or have a solution idea?

Your voice helps shape the ethical AI of tomorrow.

Stay mindful,

Deepthink
